Empathy over Algorithms: Why the Future of Global Cooperation is Human-Centered

Engaging the Human Factor in a Tech-Driven World

In a world enthralled by artificial intelligence and digital solutions, it’s tempting to imagine that better algorithms and data systems could solve our greatest global challenges. From climate change models to pandemic tracking apps, technology now permeates international efforts. Yet as geopolitical fractures widen, a crucial truth is coming into focus: no high-tech tool can substitute for the human qualities that truly bridge nations, namely empathy, trust-building, and relational intelligence. The future of international cooperation must prioritize human capabilities over purely technological solutions, using tech as support rather than a panacea. This isn’t a rejection of technology’s value; it’s a recognition that people, not processors, ultimately negotiate peace, build trust, and adapt to change.

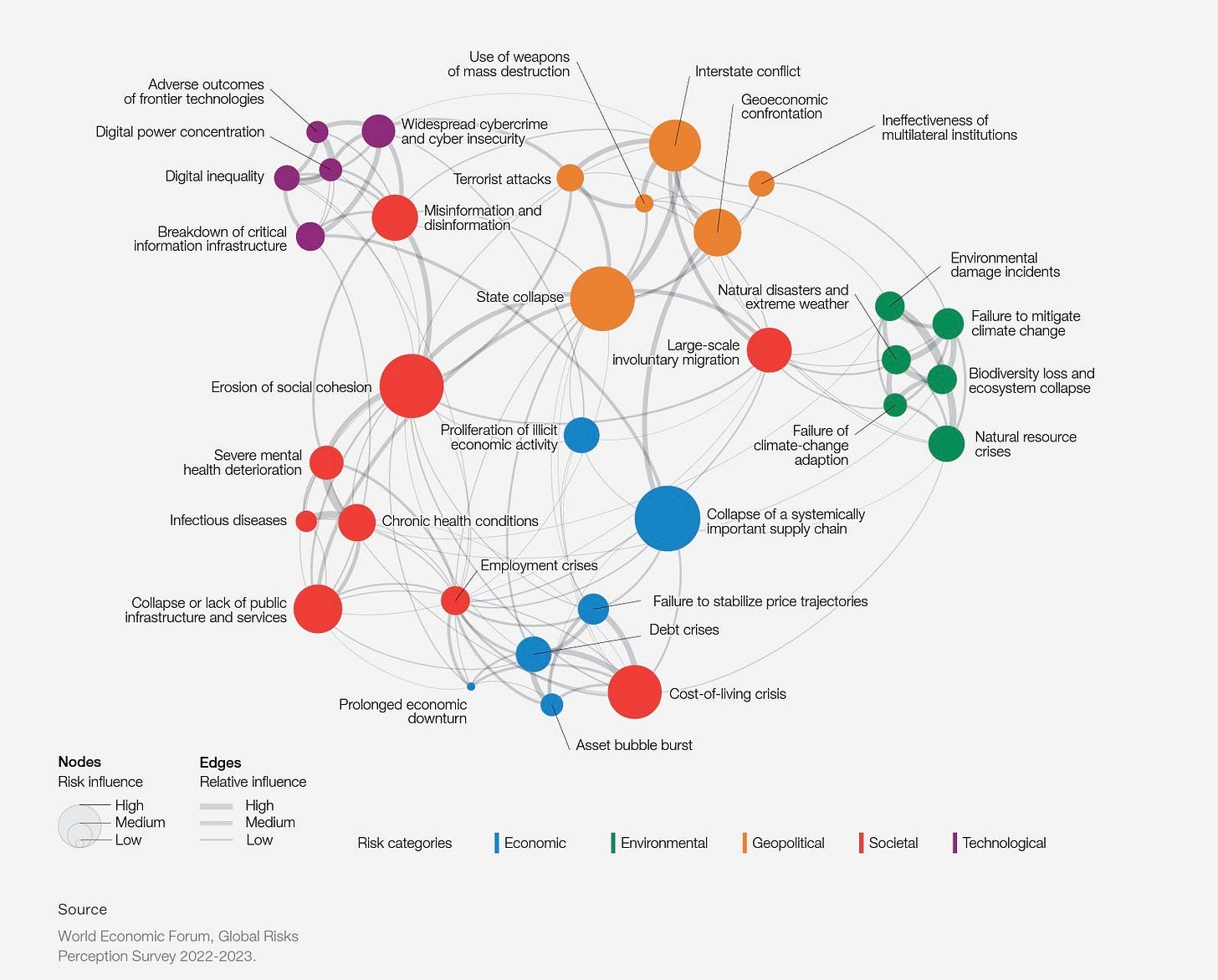

But we must also go deeper: the crises we face today often point not only to discrete policy failures or technical gaps, but to a more fundamental breakdown, a crisis of worldviews, collective meaning, and institutional assumptions. This is what many thinkers describe as the Metacrisis: a systemic unraveling that lies beneath and between the visible emergencies. To respond effectively, global cooperation must engage not only tools and treaties, but the underlying human capacities for sense‑making, shared values, resilient systems, and imaginative adaptation.

The Lure and Limits of Tech-Driven Solutions

In recent years, global governance has eagerly embraced digital innovation. The United Nations, for example, has launched a new Digital Cooperation Portal leveraging AI to map initiatives worldwide. This portal can connect stakeholders working on issues from inclusive digital economies to AI governance, helping them coordinate across borders. World leaders also agreed in 2024 on a Global Digital Compact, a set of principles for an “open, free and secure digital future for all” anchored in human rights. These efforts reflect a broad hope that better tech connectivity and data-sharing can patch the fractures in our international system.

Technology certainly has a role in easing cooperation. Data networks let diplomats consult experts instantly across time zones. AI-driven analysis can sift through vast intelligence to inform policy. During the COVID-19 pandemic, digital platforms enabled virtual summits when travel shut down, keeping dialogue alive. And in climate negotiations, one of the most complex diplomatic arenas, machine learning models now help project the global ripple effects of each country’s carbon pledges. Such AI systems act as real-time simulators, showing stakeholders the potential trade-offs and unintended consequences of policy options. Armed with these insights, negotiators can approach talks with greater clarity.

Yet for all its promise, a tech-centric approach has stark limitations. International cooperation falters today not from a lack of data or analytic power, but from deficits of trust and understanding. No algorithm can magically resolve the “us vs. them” mentalities or heal the mistrust between powers. In fact, technology can just as easily exacerbate fragmentation: consider how social media platforms, once heralded as tools to connect the world, have also fueled misinformation and polarization across societies. The digital divide is another sobering reality. Even as advanced networks spread, roughly one-third of humanity (about 2.7 billion people) remain offline, unable to access basic internet services. Fancy AI-driven platforms mean little if entire regions lack connectivity or the institutional capacity to use them. Technological solutions tend to reach those who are already ahead, potentially widening gaps unless we invest in inclusive access and digital literacy.

Most importantly, technology lacks the human touch needed to forge genuine cooperation. Nations can share meteorological data and run joint simulations, but these tools don’t negotiate compromises; people do. As one diplomatic scholar put it, “even in this digital era, empathy, trust, and human judgment remain irreplaceable”. High-tech tools might analyze patterns or monitor compliance, but they cannot feel compassion or creatively bridge a cultural misunderstanding. History shows that lasting international agreements, from post-war reconciliations to arms control pacts, depend on leaders seeing the humanity in their counterparts, not just the cold facts on a screen.

AI on the Global Stage: Powerful Support, Not a Peacemaker

Few technologies illustrate both the potential and pitfalls of tech-driven diplomacy better than artificial intelligence. AI is already quietly entering diplomatic realms. A recent investigation by NPR revealed that institutions like the U.S. Center for Strategic and International Studies are experimenting with AI platforms (think advanced language models akin to ChatGPT) to draft elements of peace agreements, prevent nuclear escalation, and even monitor ceasefires. Across the Atlantic, the UK government has explored AI for scenario planning in negotiations, and researchers in Iran are studying similar applications. In the climate field, as noted, UN climate negotiators use AI to gauge the systemic impacts of different emissions pledges. And in conflict zones like Ukraine, AI-enhanced monitoring is being tested to impartially track ceasefire violations via satellite imagery and real-time data, potentially offering a neutral check when trust between warring parties is thin.

These examples show AI’s value as a diplomatic tool. Proponents argue that algorithms can crunch numbers and scan historical records far faster than any human, spotting patterns and options that negotiators might miss. An AI assistant could, for instance, alert envoys to past peace deals with analogous conditions, or instantly translate public opinion data into insights for public diplomacy. In theory, this data-driven boost could make negotiations more rational and less prone to human error or emotion. Why grope in the dark for a compromise if an algorithm can illuminate a mutually beneficial solution hidden in the data?

But diplomacy is not an engineering problem, and treating it as such can be dangerous. Seasoned diplomats remind us that the hardest conflicts persist not due to a lack of optimal solutions, but because of intangible human factors. As a commentary from the USC Center on Public Diplomacy observed, “the most difficult negotiations hinge on trust, cultural nuance, and unspoken communication that algorithms may struggle to recognize, let alone replicate”. Peace treaties aren’t mere logical puzzles; they require empathy for an adversary’s pain, the credibility built over shared cups of coffee, and the intuition to know when to press or concede. An AI, however sophisticated, has no gut feeling or moral compass. It might propose a perfectly balanced settlement on paper, yet fail to account for the trauma or pride that real leaders carry into the room.

Over-reliance on AI could even undermine the very trust we seek to build. If negotiators start bringing algorithmic recommendations to the table, counterparts may well ask: whose biases are baked into this code? We already know AI systems can inadvertently amplify biases or reflect the subjective choices of their programmers. In international affairs, where history itself is contested and each side has its own “truth”, an algorithm might unknowingly privilege one narrative over another. Without transparency and human oversight, there’s a risk of ceding moral judgment to machines, potentially eroding accountability. After all, diplomacy ultimately deals in persuasion and legitimacy; a black-box AI spitting out policy advice with no clear rationale is more likely to sow suspicion than consensus.

Encouragingly, most experts do not envision AI replacing diplomats, but rather augmenting them. AI can be a tireless research aide and scenario simulator, a way to expand our analytical horizons, but the decisions must remain in human hands. As one summit session on diplomacy succinctly noted, technology should “strengthen diplomacy, not substitute it”. The key is finding a balance: harnessing AI’s speed and breadth while keeping humans firmly in charge of judgment calls. In practice, that might mean using AI to generate options or flag inconsistencies, then having diplomats apply their wisdom and empathy to choose a path that all sides can accept. The future of international relations will depend on this balance between the algorithm and the human element, ensuring that digital tools serve as support systems for our better angels, rather than as replacements.

Digital Initiatives and the Human Touch in Global Governance

Top international institutions have also leaned into technology as a way to rejuvenate cooperation. Nowhere is this more evident than at the United Nations, where Secretary-General António Guterres, a champion of “digital cooperation”, has argued for boldly harnessing technology to improve global governance. The UN’s new Digital Cooperation Portal is one practical outcome of this push. Launched in December 2025, the portal aggregates hundreds of initiatives across issues like AI governance, inclusive digital economy, and online human rights. It even employs AI algorithms to automatically connect related projects and suggest collaboration opportunities between stakeholders worldwide. In essence, the UN built a high-tech matchmaking and tracking tool for global problem-solvers, a 21st-century upgrade to information-sharing among diplomats, NGOs, researchers, and companies.

There is plenty to applaud in such efforts. By making information transparent and accessible, digital platforms can break down silos among those working on similar challenges. The UN portal, for example, allows a small NGO in Africa working on digital literacy to discover a government initiative in Asia it could partner with. It can track progress in real time and flag gaps that need attention. This kind of connective infrastructure can certainly amplify our cooperative capacity. Likewise, the Global Digital Compact (finalized at the 2024 Summit of the Future) is poised to set shared principles for the digital domain, from closing the connectivity divide to protecting human rights online. If widely embraced, such norms could foster a baseline of trust and responsibility in how nations deploy technology.

Still, even the UN recognizes that effective digital cooperation is not just about new portals or pacts, but about inclusive governance and values. In fact, the UN’s digital cooperation blueprint emphasizes a multi-stakeholder approach, calling for “open, free and secure” digital futures anchored in universal human rights. This implies that improving technology without engaging the people who use and govern it will fall short. The UN’s proposals include strengthening digital education and skills, so that people everywhere can participate, a reminder that human capacity building is as important as fiber optic cables. They also stress developing common norms for digital trust and security, like voluntary codes of conduct for information integrity. Agreeing on norms involves building consensus across cultures and sectors, which is inherently a human political process. And notably, the UN is careful not to create yet another rigid bureaucracy for tech governance. Instead it suggests “agile arrangements” and a flexible Digital Cooperation Forum to convene varied actors and adapt over time. In other words, even our frameworks for using technology in cooperation must themselves be adaptive, networked, and centered on dialogue, not top-down or one-size-fits-all.

Real-world experience bears out the need for human-centric implementation of digital initiatives. Take the challenge of bridging the global internet divide: deploying satellites or 5G networks alone won’t automatically build trust in communities hesitant about outside influence, nor will it ensure women or marginalized groups can equally benefit. That requires on-the-ground engagement, culturally aware policy, and long-term relationship-building. Or consider cyber diplomacy: nations might agree in principle not to attack each other’s critical infrastructure online, but without trust, verified through transparency and communication, those agreements remain paper promises. The International Telecommunication Union (ITU) provides an instructive example. Even during periods of high geopolitical tension, countries with starkly different agendas have cooperated in the ITU to set technical standards and spectrum agreements for the global Internet. Why? Because a degree of trust and mutual interest was cultivated over time among technical experts and diplomats working outside the media spotlight. It’s a reminder that technology agreements stick when there is human rapport behind them.

Perhaps the starkest illustration came during the Cold War. At the height of US-Soviet rivalry, an era with zero digital communication between blocs, the superpowers still struck accords on issues like arms control and scientific cooperation. They negotiated face-to-face through interpreters, often aided by backchannel envoys who built personal relationships. Those breakthroughs weren’t achieved by some advanced analytic engine; they were achieved by human diplomats painstakingly finding common ground despite profound distrust. As analysts have noted, even adversaries found ways to collaborate on existential threats, proving that political will and empathy can overcome fragmentation when it truly matters. It’s a lesson worth heeding: global cooperation ultimately advances at the speed of trust, not just the speed of broadband.

Beyond Techno-Solutions: A Human-Centered Cooperation Framework

So what would a future international cooperation paradigm look like if we put human capabilities front and center? It would certainly leverage the best of our technological tools; we’d be foolish not to use AI for better insights, or digital platforms to include more voices. But the core ethos and methods would revolve around strengthening resilience, dialogue, and adaptive governance. Below is a framework outlining these pillars:

Resilience: Building relationships that endure. A human-centered approach prioritizes resilient bonds between nations and communities, so cooperation can weather crises and political swings. This means investing in trust over time through exchanges, cultural diplomacy, and collaborative projects that bind people together beyond official treaties. Resilience also involves diversifying channels of cooperation: not just government-to-government, but city networks, scientific collaborations, business and civil society partnerships. Multiple connections create redundancy: if one political link frays, others can carry the weight. The eradication of smallpox in 1980 is a classic example: it succeeded because a coalition of governments, WHO experts, and local health workers maintained a shared mission despite Cold War rivalries. When relationships are genuine and multi-layered, they can absorb shocks. In practice, fostering resilience might involve everything from joint training programs for young diplomats (building personal trust early) to twinning cities across divides, or maintaining scientific dialogue even when other ties break down. The goal is a web of human connections that hold firm when technology alone cannot.

Dialogue and Sense-Making: Embedding continuous communication and empathy. At the heart of diplomacy lies dialogue, the slow, sometimes painstaking process of listening, understanding, and finding common language. But in a metacrisis context, dialogue must go deeper: it should help societies confront the breakdown of shared narratives and worldviews. A future cooperation framework would double down on dialogue at all levels. This means reinvigorating traditional diplomacy (quiet bilateral talks, UN negotiations, mediators shuttling between sides) with a focus on empathetic listening. It also means embracing new forms of dialogue: virtual town halls that include citizen voices in global issues, or multi-stakeholder forums where governments, tech companies, NGOs, and indigenous leaders all converse as equals. Crucially, dialogue must be inclusive, pulling in perspectives from the Global South, from women and youth, and other often-marginalized voices. When people feel heard and respected, trust follows. As an ethic, dialogue-oriented cooperation values understanding different narratives over imposing one’s solution. Take the example of the UN’s consultations for the Global Digital Compact, which involved hundreds of organizations and diverse stakeholders to ensure the resulting principles reflected a broad consensus. That process is as important as the product, because it builds buy-in. In short, there is no shortcut or app for trust; it grows through sincere dialogue. International bodies might therefore invest more in convening power, creating safe spaces for conversation (online and offline), than in flashy tech summits that announce grand initiatives without deep consultation.

Adaptive Governance: Staying flexible and learning continuously. The pace of change in today’s world is blistering. Whether it’s AI breakthroughs, emerging diseases, or shifting geopolitical alliances, our cooperation mechanisms must adapt or risk obsolescence. An adaptive governance mindset accepts that no single institutional design or treaty will last forever; what matters is the capacity to evolve rules and structures as conditions change. This is inherently a human capability: the creativity to experiment, the humility to recognize failure, and the collective intelligence to iterate solutions. In practice, adaptive global governance might borrow from the agile methods of the tech world, piloting small cooperative schemes, evaluating results, then scaling up what works (and scrapping what doesn’t). We see hints of this in proposals for “networked” multilateralism, where ad-hoc coalitions tackle specific problems and feed their lessons back into the larger system. The UN’s own vision for the future acknowledges the need for more agile arrangements and “networked collaboration” instead of rigid hierarchies. For example, rather than creating a new bureaucratic agency for AI, an adaptive approach might set up a high-level advisory body to regularly review AI developments and convene different stakeholders as needed. This allows governance to adjust course as we learn more. Similarly, treaties could include sunset clauses or periodic review conferences to update their terms. Adaptive cooperation also means embracing diverse models: sometimes a legally binding treaty is best, while at other times a voluntary pledge or a coalition of the willing might achieve faster results. The key is flexibility and feedback: mechanisms to monitor global initiatives, share knowledge of what’s effective, and pivot when things aren’t working. Technology can aid this (through better data on outcomes), but again, it’s humans who must interpret and act on that feedback with wisdom.

Putting Humanity at the Heart of Global Cooperation

None of this is to suggest that we roll back the clock to a pre-digital era of quill-and-ink diplomacy. On the contrary, embracing human-centered cooperation means using every innovative tool at our disposal, but doing so in service of human aims and guided by human values. The world’s challenges in 2025, from cross-border conflicts and climate disruptions to cyber risks and pandemics, are undeniably complex. Technical fixes alone won’t solve them. We need empathic diplomats who can understand an adversary’s fears, visionary leaders who inspire trust across cultures, and broad-minded negotiators who see humanity’s common future as worth fighting for. Technology can assist these people, giving them better information, amplifying their reach, connecting them in novel ways, but it cannot replace their judgment and heart.

As we shape the future of international cooperation, the motto should be “humans at the helm.” We can design AI to flag early warnings of crises, but deciding how to respond to a brewing conflict or a biological threat is a profoundly human choice that involves ethics and courage. We can build platforms to coordinate action across continents, but getting sovereign nations to actually share resources or compromise on a deal requires trust built through face-to-face rapport. We can generate oceans of data, but making sense of it, and ensuring policies are fair and just, takes human deliberation and accountability. In short, empathy, trust-building, and moral imagination are the true currency of global cooperation, even in a high-tech age.

The good news is that many policymakers, academics, and technologists themselves recognize this imperative. Initiatives around “AI ethics” and “digital trust” are booming, indicating a desire to infuse tech development with human values. Forward-thinking diplomats talk about “augmented diplomacy”, using AI as a sophisticated assistant while keeping diplomacy’s soul intact. Across the UN and beyond, there’s a call to renew multilateralism by making it more inclusive and responsive to people’s needs. These trends all point toward an international system that is smarter not just in code, but in character.

Ultimately the choice is not humans versus machines; it is whether we align machines to human purposes and public accountability. International cooperation is a collective act of will, grounded in dignity, reciprocity, and the capacity to recognize one another across difference. AI can accelerate that work by widening participation, translating across languages, surfacing options, and stress-testing scenarios. But the legitimacy to choose among those options rests with people and institutions answerable to them, not with private platforms or celebrity technologists.

A human-centered diplomacy keeps humans at the helm and uses AI as instrumentation: fast analysis in service of slower judgment; better foresight in service of deeper listening. The rules that govern these tools must be set through transparent, plural, and democratic processes, not delegated to any single firm or founder. If we maintain that orientation, our most powerful technologies will remain infrastructure for cooperation rather than substitutes for it.

The future of global problem-solving will be written as much in the language of diplomacy (respect, dialogue, restraint) as in code and protocols. By prioritizing human capabilities and letting technology support rather than supplant them, we improve our odds of closing divides and tackling crises no nation can solve alone. In the end, progress will depend less on algorithms than on empathy disciplined by accountable individuals, collectives, and institutions.

This piece really made me think about how, even for someone who loves AI and algorithms, it's so clear that the 'metacrisis' you describe demands a focus on human qualities like empathy and shared values, not just as alternatives, but as the very foundation for designing any tech that truly helps global cooperation.